
Running Machine Learning on a PLC

Development of a real-time capable PLC that can run ML and control applications

Request/problem:

The customer wants to integrate Machine Learning functionality into their machine control without compromising its real-time performance. The application is to recognize human hand gestures from live video and trigger appropriate actions in the control application. The system must be powerful enough both to guarantee real-time control and to achieve sufficiently low inference latency at high accuracy. Communication between the control application and the gesture recognition must be established so that humans can interact with the machine via gestures. Finally, the usability of the system must be evaluated on a real machine demonstrator.

Solution:

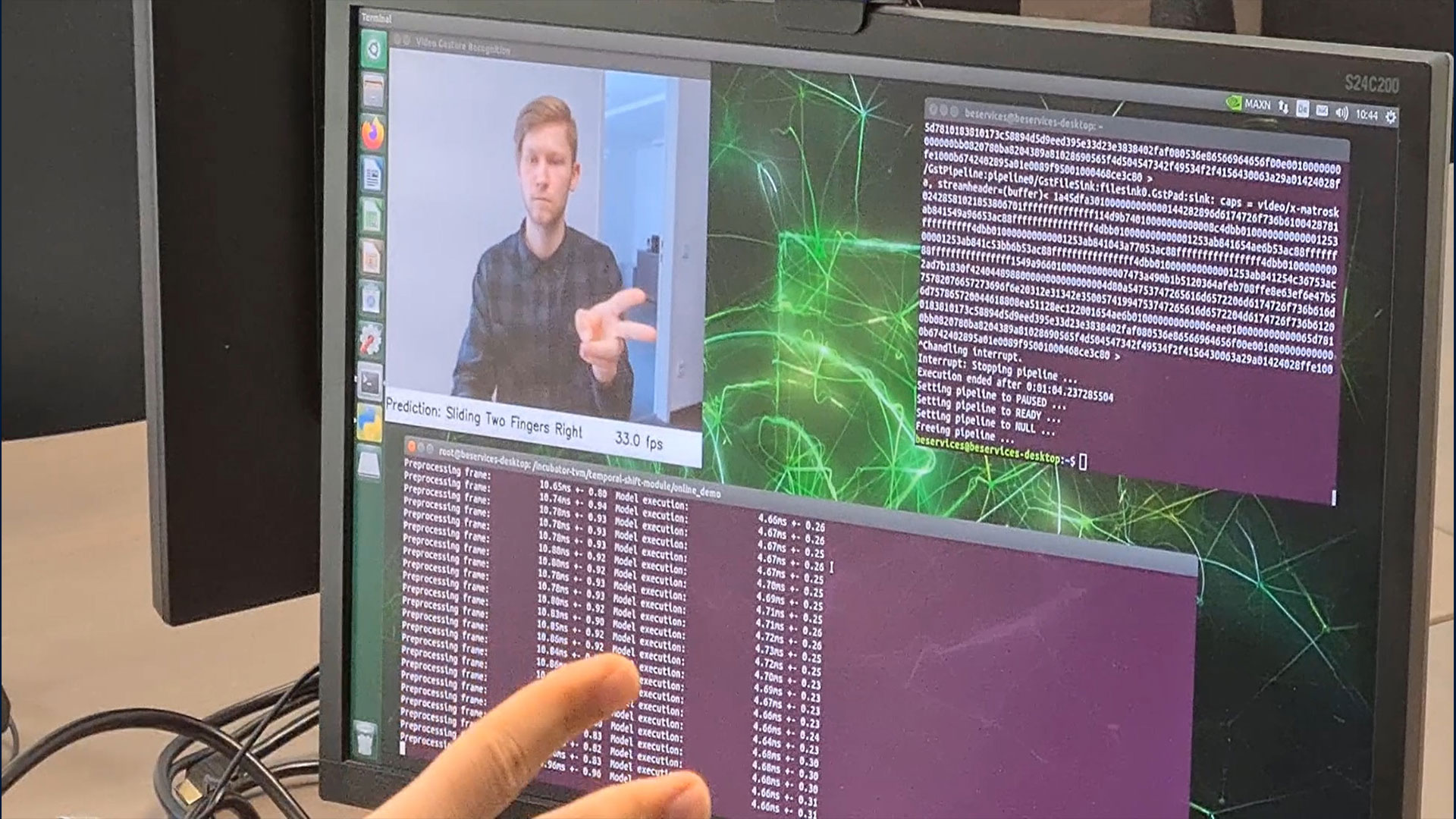

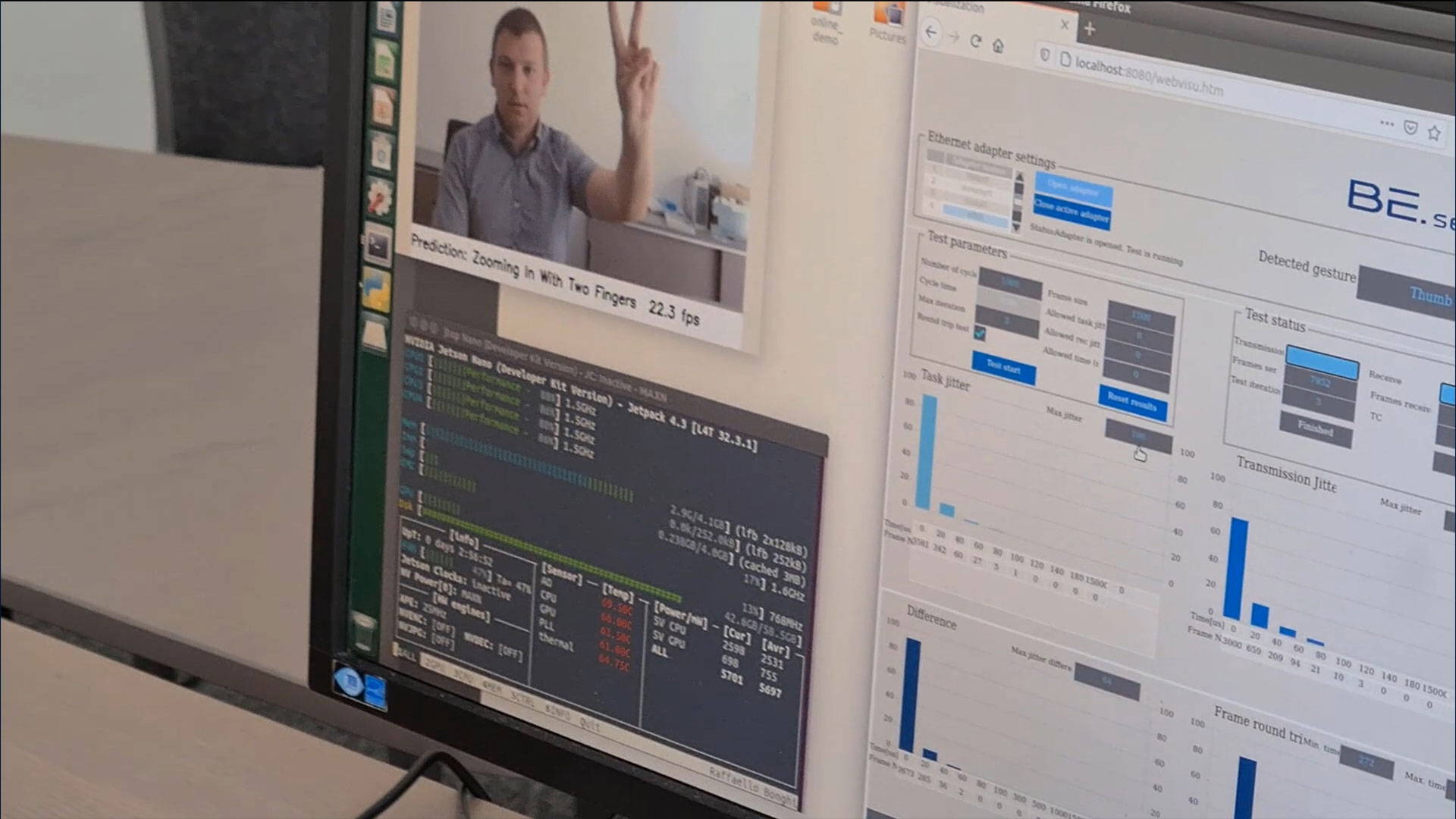

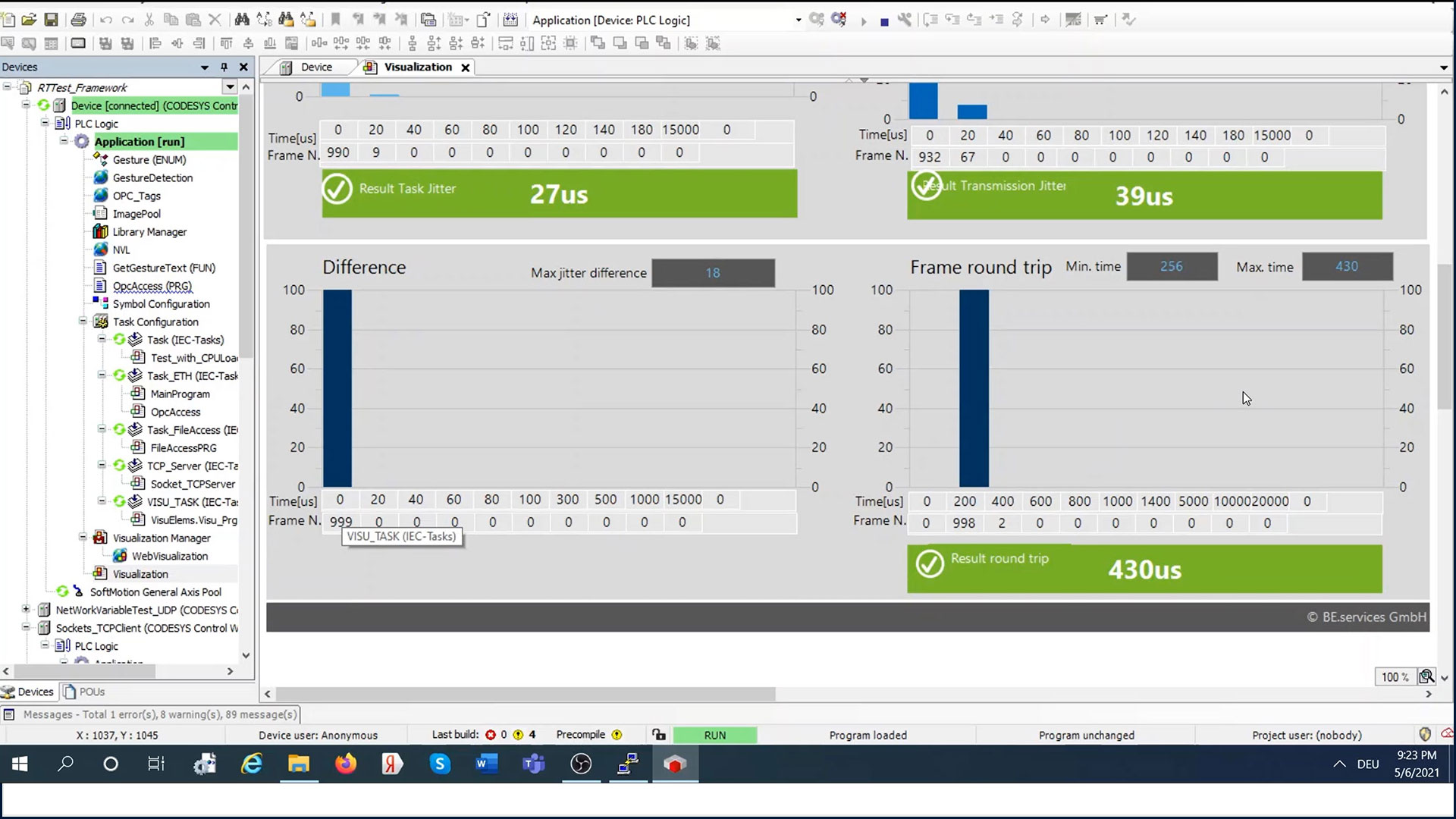

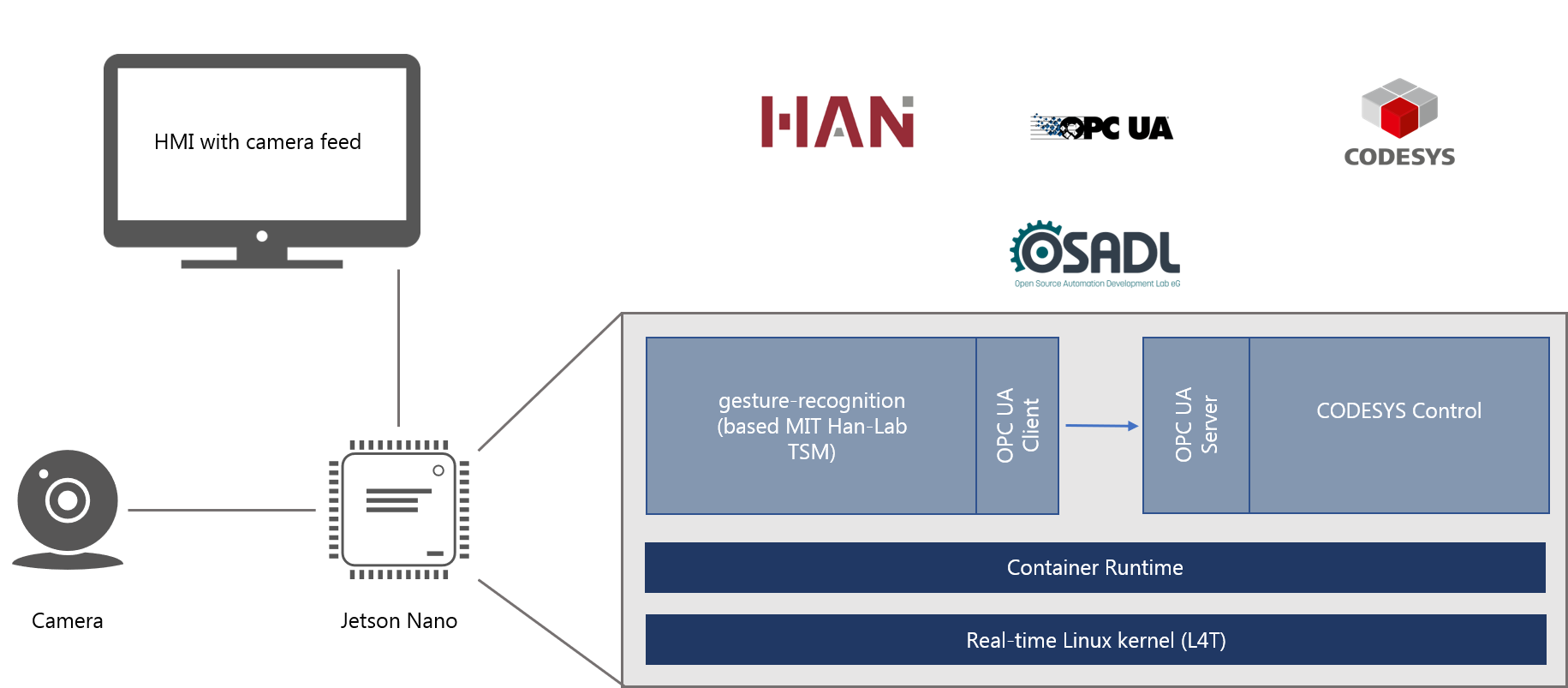

The Jetson Nano platform was chosen for this project because it provides GPU acceleration of AI workloads in an embedded form factor. First, a real-time performance baseline is established by testing the standard L4T kernel with and without an ML workload. The same tests are then repeated after applying the PREEMPT-RT patch to the Linux kernel and building the patched kernel, and the two sets of measurements are compared. All measurements are performed with our own proprietary Real-Time Test Framework.

For gesture recognition, two pre-trained CNN models are evaluated, and the better-performing one is deployed in a Python program that classifies individual frames from a webcam connected to the Jetson Nano. This application runs in its own Docker container.

For machine control, the CODESYSControl runtime is installed from a Debian package inside a second Docker container. The configuration and boot application required by the runtime are persisted in Docker volumes. The runtime system includes an OPC UA server, reachable via its network port, which serves as the interface between gesture recognition and machine control: the recognized gesture is written to a variable on the OPC UA server, and the control program uses this variable in its logic. Both containers together form a microservice application that can be started with the docker-compose tool.
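The proprietary Real-Time Test Framework is not described here. As a rough illustration of the kind of measurement such tools perform (the same principle as the open-source `cyclictest` from the rt-tests suite), the following Python sketch runs a periodic loop on a fixed schedule of absolute wake-up times and records how late each wake-up occurs. The function name and parameters are hypothetical, and Python's interpreter overhead makes this unsuitable for qualifying a kernel; it only shows the measurement idea.

```python
import time
from typing import List


def measure_wakeup_latency(period_ns: int, cycles: int) -> List[int]:
    """Run a periodic loop and return the wake-up latency of each cycle in ns.

    Latency = actual wake-up time - scheduled wake-up time. Large or highly
    variable latencies indicate that periodic tasks are not served on time.
    (Hypothetical helper for illustration, not the project's framework.)
    """
    latencies: List[int] = []
    # Absolute schedule: each deadline is derived from the previous one, so
    # lateness in one cycle does not shift the following deadlines.
    next_wakeup = time.monotonic_ns() + period_ns
    for _ in range(cycles):
        remaining = next_wakeup - time.monotonic_ns()
        if remaining > 0:
            time.sleep(remaining / 1e9)  # relative sleep up to the deadline
        latencies.append(time.monotonic_ns() - next_wakeup)
        next_wakeup += period_ns
    return latencies


if __name__ == "__main__":
    # 1 ms period, 1000 cycles (illustrative values only)
    samples = measure_wakeup_latency(period_ns=1_000_000, cycles=1000)
    print(f"min={min(samples)} ns  max={max(samples)} ns  "
          f"avg={sum(samples) / len(samples):.0f} ns")
```

Running such a loop once on an idle system and once under a parallel ML workload mirrors the with/without comparison described above.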
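The hand-off from gesture recognition to machine control can be sketched as follows. This is an illustrative Python sketch, not the project's code: the label set, the confidence threshold and the `write_gesture` callback are hypothetical. The per-frame class scores stand in for the CNN output, and in a real deployment `write_gesture` would wrap an OPC UA client write to the runtime's gesture variable (for example using the `asyncua` library).

```python
from typing import Callable, Iterable, List, Optional, Sequence

# Hypothetical gesture classes; the real model's label set is not documented here.
GESTURE_LABELS = ("none", "fist", "open_palm", "thumbs_up")


def classify(scores: Sequence[float], labels: Sequence[str],
             min_confidence: float) -> str:
    """Map one frame's class scores to a label, falling back to 'none'."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best] if scores[best] >= min_confidence else "none"


def run_gesture_loop(frame_scores: Iterable[Sequence[float]],
                     write_gesture: Callable[[str], None],
                     labels: Sequence[str] = GESTURE_LABELS,
                     min_confidence: float = 0.8) -> List[str]:
    """Classify each frame and publish the gesture only when it changes.

    Returns the sequence of values that were written, for inspection.
    """
    last_written: Optional[str] = None
    written: List[str] = []
    for scores in frame_scores:
        gesture = classify(scores, labels, min_confidence)
        if gesture != last_written:
            write_gesture(gesture)  # e.g. a write to the OPC UA variable
            written.append(gesture)
            last_written = gesture
    return written


if __name__ == "__main__":
    # Simulated softmax outputs for five consecutive frames.
    frames = [
        [0.90, 0.05, 0.03, 0.02],  # none
        [0.10, 0.85, 0.03, 0.02],  # fist
        [0.05, 0.90, 0.03, 0.02],  # fist again -> no new write
        [0.30, 0.40, 0.20, 0.10],  # low confidence -> none
        [0.02, 0.03, 0.05, 0.90],  # thumbs_up
    ]
    run_gesture_loop(frames, write_gesture=lambda g: print("write:", g))
```

Writing only when the recognized gesture changes keeps OPC UA traffic low and gives the control program a clear edge to react to; whether the original application does this is not stated.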

Architecture:

Results:

Using the Real-Time Test Framework, it was established that the Jetson Nano with the standard L4T kernel is not real-time capable even without the ML workload, and that all metrics become significantly worse when the ML workload is added. After applying the PREEMPT-RT patch, however, real-time performance was achieved even while compute-intensive ML workloads were running. The ML workload in this case is a CNN based on MobileNetV2, which classified gestures in a live video stream at around 30 fps with good accuracy. After successful testing, the system was deployed to control a demonstrator machine. Communication between the containers via OPC UA worked well, and there was no noticeable delay between a detected gesture and the demonstrator's response. This demonstrated that PLC and Machine Learning functionality can be successfully combined on one platform.
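The specific metrics used in the project are not listed here. To illustrate how latency measurements from different kernel configurations might be compared, the sketch below computes common statistics (minimum, mean, maximum, jitter as maximum minus minimum) and counts deadline misses against a latency budget. The budget, the `LatencyStats` structure and the sample values are hypothetical and are not the project's results.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass
class LatencyStats:
    min_us: float
    avg_us: float
    max_us: float
    jitter_us: float  # max - min
    misses: int       # samples above the latency budget


def summarize(latencies_us: Sequence[float], budget_us: float) -> LatencyStats:
    """Summarize wake-up latencies (in microseconds) against a budget."""
    if not latencies_us:
        raise ValueError("no samples")
    lo, hi = min(latencies_us), max(latencies_us)
    return LatencyStats(
        min_us=lo,
        avg_us=mean(latencies_us),
        max_us=hi,
        jitter_us=hi - lo,
        misses=sum(1 for x in latencies_us if x > budget_us),
    )


def is_real_time_capable(stats: LatencyStats) -> bool:
    # Hard real-time criterion: the worst case must stay within the budget.
    return stats.misses == 0


if __name__ == "__main__":
    budget = 100.0  # hypothetical latency budget in microseconds
    # Made-up samples purely to exercise the code; not measured data.
    configs = {
        "config A": [12.0, 35.0, 480.0, 22.0],
        "config B": [8.0, 15.0, 41.0, 11.0],
    }
    for name, samples in configs.items():
        s = summarize(samples, budget)
        print(name, s, "OK" if is_real_time_capable(s) else "deadline misses")
```

The worst-case value matters more than the average here: a single overrun of the budget is enough to disqualify a configuration for hard real-time control.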

More information is available in the whitepaper.
